Public relations professionals face a reckoning. As artificial intelligence tools become standard equipment in our industry—from content generation to media monitoring—the question is no longer whether to use AI, but how to use it responsibly. The stakes are high: client trust, professional credibility, and legal compliance all hang in the balance. Recent updates to professional codes of ethics from PRSA, IPRA, and the Global Alliance signal that the industry has moved past experimentation into a phase demanding rigorous standards, transparent practices, and accountable governance.

Disclosure Requirements: When and How to Reveal AI Use

Transparency starts with disclosure, but knowing when and how to disclose AI involvement requires judgment and clear protocols. PRSA’s 2025 AI Ethics Guidelines establish that disclosure is required when AI significantly influences outcomes, particularly in client deliverables. This means if an AI tool drafts a press release, generates social media content, creates visual assets, or assists in hiring decisions, stakeholders deserve to know.

Practical application matters more than abstract principle. When AI generates content that could influence public opinion, clear labeling becomes non-negotiable. PRSA’s updated code mandates annual training on AI tool limitations and requires explicit client disclosure for AI-generated materials. This isn’t about burying a disclaimer in fine print; it’s about upfront, honest communication that respects the intelligence of your audience and clients.

Documentation serves as your professional insurance policy. PRSA recommends maintaining records of significant AI use, especially in public-facing work. This creates an audit trail that protects both your organization and your clients. The guidelines provide templates for integrating transparency into daily workflows, making disclosure a routine practice rather than an afterthought.
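As one way to picture such an audit trail, here is a minimal Python sketch of an append-only AI-use log. It is an illustration only, not a PRSA template: the `AIUseRecord` fields, the JSON Lines file format, and the helper names are all assumptions chosen for the example.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class AIUseRecord:
    """One entry in a hypothetical AI-use log (all field names are assumptions)."""
    client: str
    deliverable: str
    tool: str
    purpose: str
    reviewed_by: str
    disclosed_to_client: bool
    # Timestamp is filled in automatically, in UTC, when the record is created.
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(path: str, record: AIUseRecord) -> None:
    """Append the record as one JSON line so earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


def undisclosed(path: str) -> list[dict]:
    """Return logged entries where the client has not yet been told about AI use."""
    with open(path, encoding="utf-8") as f:
        entries = [json.loads(line) for line in f if line.strip()]
    return [e for e in entries if not e["disclosed_to_client"]]
```

A team might call `append_record` whenever an AI tool contributes to a deliverable, then run `undisclosed` before each client report to catch anything that still needs to be disclosed.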

Consider the contract phase as your first opportunity for transparency. IPRA’s ethical standards emphasize disclosing AI use in communications upfront, before work begins. This prevents misunderstandings and establishes clear expectations. When clients understand how AI tools support your work—and where human expertise remains irreplaceable—trust deepens rather than erodes.

Privacy concerns add another layer to disclosure requirements. PRSA cautions against using public AI tools for sensitive client information, as data privacy risks multiply when proprietary information enters third-party systems. Your disclosure protocols should address not just what AI does, but how client data remains protected throughout the process.

Governance Policies: Building Frameworks for Responsible AI Use

Strong governance transforms ethical intentions into operational reality. The Global Alliance’s 2025 Venice Pledge outlines governance models that prioritize human-led oversight aligned with public interest. These frameworks must address privacy protection, misinformation prevention, intellectual property rights, security protocols, bias mitigation, and the limits of autonomous decision-making.

Human oversight represents the cornerstone of ethical AI governance. PRSA explicitly warns against AI platforms that replace human judgment, insisting that final decision-making authority must rest with trained professionals. This principle protects against the most dangerous AI failure mode: the abdication of professional responsibility to algorithmic outputs.

Your governance structure should include regular AI audits. The PR Council recommends conducting systematic reviews of AI tool usage, assessing both compliance with ethical standards and alignment with organizational values. These audits identify gaps before they become crises, allowing proactive policy updates rather than reactive damage control.

Vendor transparency deserves scrutiny within your governance framework. PRSA’s guidelines stress the importance of understanding how AI vendors operate, what data they collect, and how their algorithms make decisions. If a vendor cannot explain their system’s logic or data handling practices, that’s a red flag demanding attention.

Training programs ensure governance policies translate into team competency. Annual education on AI limitations, bias awareness, and responsible data handling keeps your staff current as technology and regulations change. This investment in capacity building pays dividends in risk reduction and quality improvement.

Policy documentation provides clarity and accountability. Written guidelines that specify when AI use is appropriate, what approval processes apply, and how to handle edge cases remove ambiguity. These documents also demonstrate due diligence should legal questions arise.
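A written policy of this kind can also be expressed as data and checked automatically. The sketch below is a hypothetical illustration in Python: the task types, the rules in `POLICY`, and the `Task` fields are placeholders invented for the example, not requirements taken from any published guideline.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy table: task type -> required controls.
POLICY = {
    "press_release": {"ai_allowed": True, "human_approval": True, "client_disclosure": True},
    "media_monitoring": {"ai_allowed": True, "human_approval": False, "client_disclosure": False},
    "crisis_statement": {"ai_allowed": False, "human_approval": True, "client_disclosure": True},
}


@dataclass
class Task:
    kind: str
    uses_ai: bool
    approved_by: Optional[str] = None
    client_informed: bool = False


def policy_violations(task: Task) -> list[str]:
    """List every way the task falls short of the written policy."""
    rule = POLICY.get(task.kind)
    if rule is None:
        # Edge case: an unlisted task type is escalated rather than silently allowed.
        return [f"no policy defined for '{task.kind}'; escalate for review"]
    if not task.uses_ai:
        return []
    problems = []
    if not rule["ai_allowed"]:
        problems.append("AI use is not permitted for this task type")
    if rule["human_approval"] and not task.approved_by:
        problems.append("missing human approval")
    if rule["client_disclosure"] and not task.client_informed:
        problems.append("client has not been informed of AI use")
    return problems
```

Treating an unknown task type as a violation is a deliberate choice in this sketch: it forces edge cases into human review instead of letting them pass by default.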

Bias Mitigation: Identifying and Correcting AI Failures

AI systems inherit biases from their training data, making bias detection and mitigation critical competencies for PR professionals. Regular bias audits using diverse datasets help identify problematic patterns before they reach your audience. Tools like IBM’s AI Fairness 360 toolkit provide structured approaches to measuring and addressing algorithmic bias.
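To make the idea of a bias audit concrete, here is a small Python sketch that computes a disparate impact ratio, one of the simpler fairness measures that bias toolkits typically report. It uses plain Python rather than any toolkit's API, and the group labels and sample data are invented for illustration.

```python
from collections import defaultdict


def favorable_rates(records):
    """Map each group to the share of its records with a favorable outcome.

    `records` is an iterable of (group, favorable) pairs, where `favorable`
    is True or False.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in records:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(records, unprivileged, privileged):
    """Ratio of favorable-outcome rates: unprivileged group over privileged group."""
    rates = favorable_rates(records)
    if rates[privileged] == 0:
        raise ValueError("privileged group has no favorable outcomes; ratio undefined")
    return rates[unprivileged] / rates[privileged]


# Hypothetical sample: how often did an AI screening tool shortlist each group?
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
ratio = disparate_impact(sample, unprivileged="group_b", privileged="group_a")
print(f"disparate impact: {ratio:.2f}")
```

A ratio near 1.0 means both groups receive favorable outcomes at similar rates. A common rule of thumb treats values below 0.8 as a signal to investigate, though the right threshold depends on context and a single number should never replace human review.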

Anti-hallucination protocols protect against AI’s tendency to generate plausible-sounding falsehoods. Every AI output requires fact-checking by qualified humans. Treating AI-generated content as a starting point rather than a finished product prevents the spread of misinformation that could damage your clients and your reputation.

Watermarking synthetic media addresses a related risk: the potential for AI-generated images, audio, or video to mislead audiences. Clear labeling of synthetic content maintains transparency and prevents deceptive practices, even when the content itself is accurate.

Continuous monitoring catches bias that emerges over time. AI systems can drift as they process new data or as societal contexts shift. Regular reviews of AI outputs across different demographic segments, topics, and formats reveal patterns that might otherwise go unnoticed.

Team literacy in bias detection multiplies your organization’s defensive capabilities. Training PR professionals to recognize common AI failure modes—stereotyping, omission of perspectives, overconfidence in uncertain outputs—creates multiple checkpoints before content reaches the public.

Diverse datasets improve AI performance, but they require intentional effort to assemble. When training or fine-tuning AI tools, ensure your data sources represent the full spectrum of perspectives and experiences relevant to your work. Homogeneous training data produces homogeneous, often biased, outputs.

Client Expectations: Meeting Professional Standards

Clients expect honesty, accuracy, and accountability—expectations that extend to AI use. The Global Alliance emphasizes that ethical AI practices must adhere to professional codes of ethics, focusing on fairness and accuracy in all communications. When clients understand how AI supports your work without compromising these values, confidence grows.

Contract transparency sets the foundation for meeting client expectations. IPRA recommends disclosing AI use in initial agreements, specifying which tasks may involve AI assistance and where human expertise drives strategy and execution. This clarity prevents disputes and aligns expectations from the start.

Case examples demonstrate how transparency builds rather than undermines client relationships. PRSA’s 2025 guidelines include scenarios showing how to communicate AI involvement in ways that highlight efficiency gains while reassuring clients about quality control and strategic oversight. These conversations position AI as a tool that amplifies human expertise rather than replacing it.

Accountability mechanisms reassure clients that someone remains responsible for outcomes. Clear lines of authority—who reviews AI outputs, who approves final deliverables, who responds when problems arise—provide the structure clients need to feel secure in the relationship.

Professional integrity demands that we guide clients toward ethical AI use, even when they might prefer shortcuts. Your role includes educating clients about risks, recommending best practices, and sometimes declining work that would compromise ethical standards. This advisory function protects both parties from reputational and legal harm.

Compliance with Global AI Laws: Managing Legal Risk

The regulatory environment for AI continues to shift, making ongoing education necessary for compliance. IPRA’s ethical standards summarize international AI regulations and recommend that PR professionals manage legal risks through documentation, accountability systems, and continuous learning about evolving requirements.

Documentation creates the evidence trail regulators may request. Records of AI use, decision-making processes, bias audits, and corrective actions demonstrate good faith efforts to comply with ethical and legal standards. This paper trail becomes invaluable if your organization faces scrutiny.

The Global Alliance framework aligns with emerging global AI laws by emphasizing capacity building and advocacy. Staying informed about regulatory developments in the jurisdictions where you operate—and where your clients operate—prevents costly violations and positions you as a trusted advisor on compliance matters.

Regular policy updates keep your organization ahead of regulatory changes. The PR Council advises implementing systematic reviews of internal policies, updating them as new laws take effect and as industry best practices develop. This proactive approach beats scrambling to comply after regulations land.

Legal counsel should review your AI governance policies. While PR professionals understand communication ethics, attorneys understand regulatory compliance. Collaboration between these disciplines produces policies that satisfy both ethical and legal requirements.

Risk management extends beyond compliance to reputation protection. Even in jurisdictions without specific AI regulations, ethical lapses can trigger public backlash, client defections, and professional sanctions. The highest standard—not the minimum legal requirement—should guide your AI practices.

The integration of AI into public relations work demands more than technical proficiency. It requires ethical clarity, transparent practices, robust governance, and unwavering commitment to professional standards. The frameworks provided by PRSA, IPRA, the Global Alliance, and the PR Council offer concrete guidance for navigating this complex terrain. Start by auditing your current AI use, documenting your practices, and identifying gaps in your disclosure protocols and governance policies. Invest in training that builds your team’s capacity to detect bias and misinformation. Engage clients in honest conversations about AI’s role in your work. Stay current on regulatory developments that affect your practice. These steps transform ethical AI use from an aspiration into operational reality, protecting your clients, your organization, and the integrity of the profession itself.

Ethical AI in PR: New Standards for Transparency and Compliance

Public relations professionals face a reckoning. As artificial intelligence tools become standard...

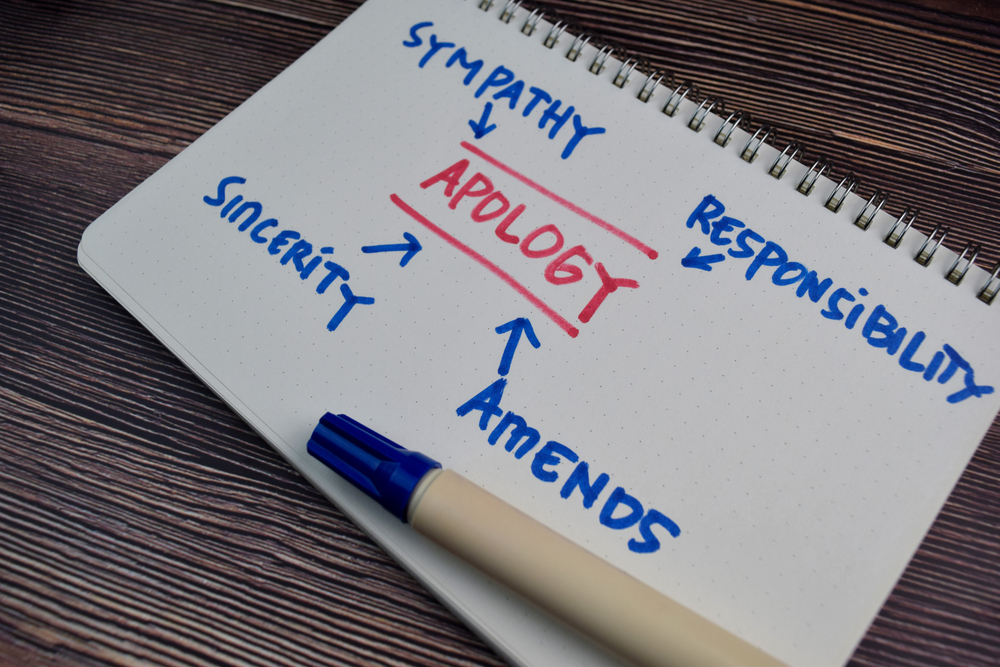

How to Apologize Publicly with Effective Apology Strategies

Public apologies have become a defining feature of modern reputation management. When public...

Employee Advocacy for Health Tech Companies That Drives Results

Your employees already talk about their work. The question is whether they're amplifying your...